Excerpt of How To Speak Machine via Engadget

When the co-founder of Google talks about his fear of what AI can bring, it isn’t only a ploy to boost Google’s share value; it’s because those who have been plugged in (literally) to the ride up Moore’s Law and its impact know something that the general population doesn’t. When Harvard scholar Jill Lepore refers to the transformation of politics in the United States and says,

“Identity politics is market research, which has been driving American politics since the 1930s. What platforms like Facebook have done is automate it. — Jill Lepore #MLTalks”

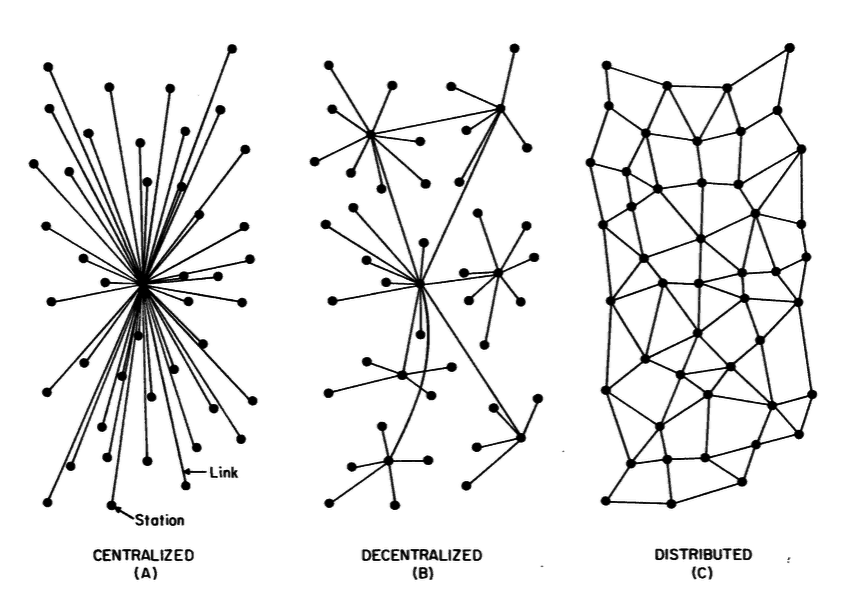

the keyword to highlight is “automate”, because machines run loops, machines get large, machines are living. It’s not you turning a crank by hand to make a plastic robot move. You are now aware that it’s all happening in ways that far transcend our physical universe. When a young man says on camera that Cambridge Analytica and Facebook together were able to affect the election in the US, we look at him and think he couldn’t have done that, and that there can’t be that many people in the world who could process millions of pieces of information. But you’re aware of computation now, and you understand that the present is significantly (2X) different from even the recent past (just over a year ago).

Automation in Moore’s Law terms is something very different from a machine that washes our clothes or a vacuum that scoots about our floors picking up dirt. It’s a Mooresian-scale processing network that spans every aspect of our lives and carries the sum total of our past data histories. That thought quickly moves from wonder to concern when we consider how all of that data is laden with biases, in some cases spanning centuries. What happens as a result? We get crime prediction algorithms that tell us where crime will happen, which means sending officers to neighborhoods where crime has historically been high, i.e. poor neighborhoods. And we get risk-scoring algorithms like COMPAS, used to inform sentencing, that are more likely to flag black defendants as high risk because they are based on past data and its biases. So when popular comedian DL Hughley was asked what he thought about AI and whether it needed to be fixed, his response that “You can’t teach machines racism” was unfortunately incorrect, because AI has already learned about racism, from us.

Let’s recall again how the new form of artificial intelligence differs from the way it was engineered in the past. Back in the day we would define different IF-THEN patterns and mathematical formulas, like in a Microsoft Excel spreadsheet, to describe the relation between inputs and outputs. When the inference was wrong, we’d look at the IF-THEN logic we had encoded to see if we were missing something, or we’d look at the mathematical formulas to see if extra tuning was needed. But in the new world of machine intelligence, you pour data into neural networks and a magic box gets created: give it some inputs and outputs magically appear. You’ve made a machine that is intelligent without explicitly writing any program per se. And when you have tons of data available, newer deep learning algorithms let you take a quantum leap in what machine intelligence can do, with results getting significantly better as more data becomes available.

Machine learning feeds off the past, so if it hasn’t happened before, it can’t happen in the future, which is why if we keep perpetuating the same behavior, AI will ultimately amplify existing trends and biases. In other words, if the masters are bad, then AI will be bad. But when systems are running largely on “auto pilot” like this, will media backlash lead to an “oops” getting subtly assigned as an “AI error” rather than a “human error”? We must never forget that everything is a human error, and when humans start to correct those errors, the machines are more likely to observe us and learn from us, too. But they’re unlikely to make those corrections on their own unless they’ve been exposed to enough cases where doing so has been shown to be important.
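To make the contrast concrete, here is a minimal sketch in Python. It is only an illustration under loud assumptions: the "learning" is a simple majority vote over past outcomes rather than a neural network, and the function names, the score threshold, and the tiny history of decisions are all invented for this example.

```python
from collections import Counter, defaultdict


def rule_based_decision(score: int) -> str:
    """Old style: a person writes the IF-THEN rule, so a person can read and fix it."""
    # The threshold of 50 is a made-up value for illustration.
    return "approve" if score >= 50 else "deny"


def train(history):
    """New style: no rule is written; each input maps to its most common past outcome."""
    votes = defaultdict(Counter)
    for features, outcome in history:
        votes[features][outcome] += 1
    # Keep only the majority outcome seen for each distinct input.
    return {features: counts.most_common(1)[0][0] for features, counts in votes.items()}


def predict(model, features):
    """Return the learned outcome, or None if this input never appeared in the past."""
    return model.get(features)


# Invented toy history: equally qualified people, but group "B" was mostly denied.
history = (
    [(("A", "qualified"), "approve")] * 3
    + [(("B", "qualified"), "deny")] * 2
    + [(("B", "qualified"), "approve")]
)

model = train(history)
print(rule_based_decision(60))              # approve (the hand-written rule)
print(predict(model, ("A", "qualified")))   # approve
print(predict(model, ("B", "qualified")))   # deny (copied from past decisions)
print(predict(model, ("C", "qualified")))   # None (it never happened before)
```

Nobody typed a rule that treats group B differently. The difference comes entirely from the past decisions the model was fed, and the only way to change the output is for humans to change the examples it learns from.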

Because of machine intelligence we now live in an era much like that of having children, where your children inevitably copy what you do. Oftentimes they can’t help growing up to become like their parents, no matter how hard they try. Furthermore, this newer process differs from writing a computer program, which can take months or years; machine intelligence can instead glom onto an existing set of data and patterns of past behavior and simply copy and replicate them. Automation can happen instantly and without delay. It’s how a newer form of computation behaves in the wild, increasingly without human intervention, which is why the word “automation” takes on a meaning that by now you know is a big deal. So rather than imagining that all of the data we generate gets printed out onto pieces of paper in a giant room somewhere at Google, with a staff of twenty trying to cross-reference all the information, think instead of how machines run loops, get large, and are living. And as a logical outcome of that computational power, an army of billions of zombie-style automatons will absorb all the information we generate so as to make sense of it and copy us. We will be the ones to blame for what they will do, for us.