Chapter 1 of How To Speak Machine, Section 3 via LinkedIn Weekend Reads

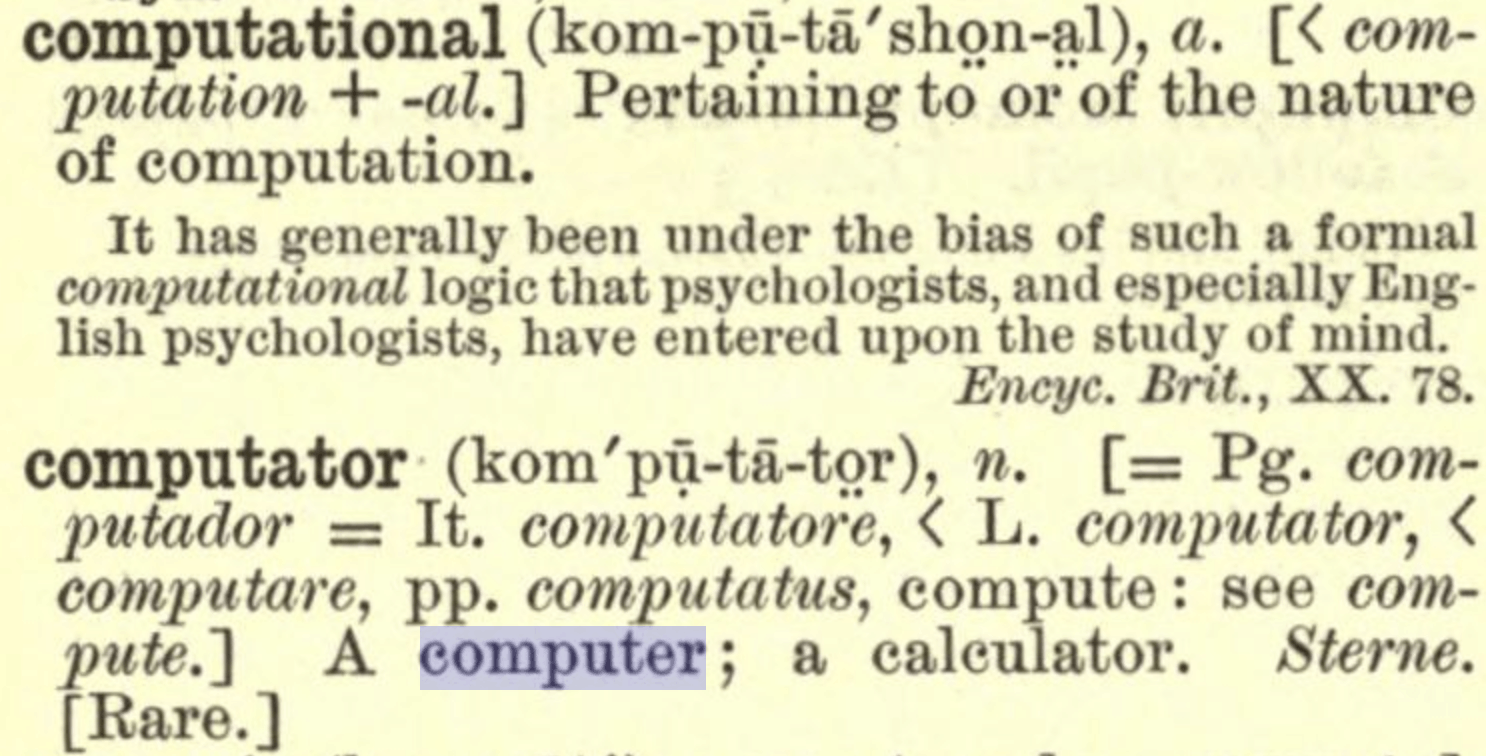

The first computers were not machines, but humans who worked with numbers—a definition that goes back to 1613, when English author Richard Braithwaite described “the best arithmetician that ever breathed” as “the truest computer of times.” A few centuries later, the 1895 Century Dictionary defined “computer” as follows:

One who computes; a reckoner; a calculator; specifically, one whose occupation is to make arithmetical calculations for mathematicians, astronomers, geodesists, etc. Also spelled computor.

At the beginning and well into the middle of the twentieth century, the word “computer” referred to a person who worked with pencil and paper. There might not have been many such human computers if the Great Depression hadn’t hit the United States. As a means to create work and stimulate the economy, the Works Progress Administration started the Mathematical Tables Project. Led by mathematician Dr. Gertrude Blanch, the project aimed to employ hundreds of unskilled Americans to hand-tabulate a variety of mathematical functions over a ten-year period. These calculations were for the kinds of numbers you’d easily access today on a scientific calculator, like the exponential function e^x or the trigonometric sine value for an angle, but they were instead arranged in twenty-eight massive books used to look up the results in precomputed, tabular form. I excitedly purchased one of these rare volumes at an auction recently, only to find that Dr. Blanch was not listed as one of the coauthors. So if conventional computation has the problem of being invisible, I realized that human computation had its share of invisibility problems too.
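To get a feel for what such a lookup table contains, here is a minimal Python sketch that tabulates a function at evenly spaced arguments, rounded to seven decimal places. The function name `tabulate`, the step size, and the range are illustrative assumptions, not details of the Mathematical Tables Project's actual methods or layout.

```python
import math

def tabulate(func, start, stop, step, places=7):
    """Return (x, f(x)) rows for x from start to stop inclusive.

    Hypothetical helper for illustration: each value is rounded to
    `places` decimal places, as a printed table entry would be.
    """
    rows = []
    # Count steps as an integer so floating-point drift cannot drop the last row.
    n = round((stop - start) / step)
    for k in range(n + 1):
        x = start + k * step
        rows.append((round(x, 10), round(func(x), places)))
    return rows

# Print a tiny sine table for angles in radians.
for x, y in tabulate(math.sin, 0.0, 0.5, 0.1):
    print(f"{x:4.1f}  {y:.7f}")
```

What takes a machine a fraction of a second here is the kind of entry a human computer would have worked out and checked by hand, one value at a time.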

Try to imagine many rooms filled with hundreds of people with a penchant for doing math, all performing calculations with pencil and paper. You can imagine how bored these people must have been from time to time, and also how they would have needed breaks to eat or use the bathroom or just go home for the evening. Remember, too, that humans make mistakes sometimes, so someone who showed up to work late after partying too much the night prior might have made a miscalculation or two that day. Put most bluntly, compared with the computers we use today, the human computers were slow, at times inconsistent, and prone to occasional mistakes that the digital computer of today would never make. But until computing machines came along to replace the human computers, the world needed to make do. That’s where Dr. Alan Turing and the Turing machine came in.

The idea for the Turing machine arose from Dr. Turing’s seminal 1936 paper “On Computable Numbers, with an Application to the Entscheidungsproblem,” which describes a way to use the two basic acts of writing and reading numbers on a long tape of paper, along with the ability to write or read from anywhere along that tape, as a means to describe a working “computing machine.” The machine would be fed a table of states and conditions that would determine where the numbers on the tape would be written or rewritten based on what it could read, and in doing so, calculations could be performed. Although an actual computing machine could not be built with the technology available back then, Turing had invented the ideas that underlie all modern computers. He claimed that such a machine could universally enable any calculation to be performed by storing the programming codes on the processing tape itself. This is exactly how all computers work today: the memory that a computer uses to make calculations happen is also used to store the computer codes.
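The read, write, and move cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Turing's original formulation: the names `run_turing_machine` and `INCREMENT_RULES`, the blank symbol `_`, and the rule format are all hypothetical choices. The rules are also kept in a Python dictionary rather than on the tape, so this is a single fixed machine and not the universal, stored-program machine.

```python
from collections import defaultdict

BLANK = "_"  # assumed symbol for an empty tape cell

def run_turing_machine(rules, tape_input, start_state, halt_state, max_steps=10_000):
    """Run a one-tape machine and return the non-blank tape contents.

    `rules` maps (state, symbol_read) -> (symbol_to_write, move, next_state),
    where move is "L", "R", or "N" (stay in place).
    """
    # A sparse dict lets the tape grow in either direction as needed.
    tape = defaultdict(lambda: BLANK)
    for i, symbol in enumerate(tape_input):
        tape[i] = symbol

    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1

    cells = [i for i, s in tape.items() if s != BLANK]
    if not cells:
        return ""
    return "".join(tape[i] for i in range(min(cells), max(cells) + 1))

# Rules that add 1 to a binary number written on the tape.
INCREMENT_RULES = {
    ("right", "0"): ("0", "R", "right"),    # scan right to the end of the number
    ("right", "1"): ("1", "R", "right"),
    ("right", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),    # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", "N", "halt"),     # absorb the carry and stop
    ("carry", BLANK): ("1", "N", "halt"),   # number grew by one digit
}

print(run_turing_machine(INCREMENT_RULES, "1011", "right", "halt"))
```

Swapping in a different rule table changes what the machine computes without touching the loop, which hints at why encoding the rules as data on the tape itself leads to a general-purpose machine.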

Instead of many human computers working with numbers on paper, Alan Turing envisioned a machine that could tirelessly calculate with numbers on an infinitely long strip of paper, bringing the exact same enthusiasm to doing a calculation once, or 365 times, or even a billion times, without any hesitation, rest, or complaint. How could a human computer compete with such a machine? Ten years later, the ENIAC (Electronic Numerical Integrator and Computer), built for the US Army, would be one of the first working computing machines to implement Turing’s ideas. The prevailing wisdom of the day was that the important work of the ENIAC was the creation of the hardware, with the credit going to ENIAC inventors John Mauchly and John Presper Eckert. The perceived “lesser” act of programming the computer was performed by a primary team of human computers comprising Frances Elizabeth Snyder Holberton, Frances Bilas Spence, Ruth Lichterman Teitelbaum, Jean Jennings Bartik, Kathleen McNulty Mauchly Antonelli, and Marlyn Wescoff Meltzer. That work turned out to be essential to the project, and yet the women computers of ENIAC were long uncredited.

As computation could be performed on successively more powerful computing machines than the ENIAC and human computers started to disappear, the actual act of computing gave way to writing the set of instructions for making calculations onto perforated paper cards that the machines could easily read. In the late 1950s, Dr. Grace Hopper invented the first “human readable” computer language, which made it easier for people to speak machine. The craft of writing these programmed instructions was first referred to as “software engineering” by NASA scientist Margaret Hamilton at MIT in the 1960s. Around this time, Gordon Moore, a pioneering engineer in the emerging semiconductor industry, predicted that computing power would double approximately every year, and the so-called Moore’s law was born. And a short two decades later I would be the lucky recipient of a degree at MIT in the field that Hamilton had named, but with computers having become by then many thousands of times more powerful. Moore’s exponential prediction turned out to be right.

To remain connected to the humanity that can easily be rendered invisible when typing away, expressionless, in front of a metallic box, I try to keep in mind the many people who first served the role of computing “machinery,” going back to Dr. Blanch’s era. It reminds us of the intrinsically human past we share with the machines of today. In the book of tabulations by Dr. Blanch’s team that I own—Table of Circular and Hyperbolic Tangents and Cotangents for Radian Arguments, which spans more than four hundred pages, with two hundred numbers calculated to seven decimal places—I find it humanly likely that a few of the calculations printed on those pages are incorrect due to human error. It was humans who built the Turing machines that have eradicated certain kinds of human error and who have made it possible to speak with machines in many fanciful computer languages. But it is all too easy to forget that humans err all the time—both when we are the machines and when we have made the machines err on our behalf. Computation has a shared ancestor: us. And although historically most of our mistakes made with computers have been errors in straightforward calculations, we now need to come to terms with the mistaken human assumptions that are embedded in our calculations, like the countless omissions in history of the role of women in computing. Computation is made by us, and we are now collectively responsible for its outcomes.